AI headphones driven by Apple M2 can translate multiple speakers at once

Google’s Pixel Buds have had an impressive real-time translation feature for some time now. Over the last few years, companies like Timekettle have also released similar earbuds aimed at business users. However, all of these solutions can process only one audio stream at a time for translation.

Researchers at the University of Washington (UW) have now built AI-powered headphones that can translate multiple speakers simultaneously. Imagine a polyglot in a noisy bar, understanding everyone around them speaking different languages, all at once.

The team calls their invention “Spatial Speech Translation,” and it works using binaural headphones. For those unfamiliar, binaural audio mimics how human ears naturally perceive sound. To capture this effect, microphones are placed on a dummy head, spaced the same distance apart as human ears.

This method matters because our ears don’t just detect sound; they also help us locate where it’s coming from. The goal is to create a natural, immersive soundstage with a stereo effect, almost like being at a live concert, or, in today’s terms, spatial listening.
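To make the idea of locating a sound with two ears concrete, here is a minimal sketch that estimates a source's direction from the tiny arrival-time difference between a left and a right microphone. It is an illustration only, not the UW team's method: the microphone spacing, sample rate, and the function names `best_lag` and `azimuth_degrees` are all assumptions made for this example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value at room temperature
EAR_SPACING = 0.18      # m, assumed distance between the two microphones
SAMPLE_RATE = 48_000    # Hz, assumed recording rate


def best_lag(left, right, max_lag):
    """Return the lag (in samples) by which `right` trails `left`.

    A positive result means the sound reached the left microphone first.
    Uses a brute-force cross-correlation over lags in [-max_lag, max_lag].
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, sample in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                score += sample * right[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def azimuth_degrees(left, right):
    """Estimate the source angle; positive values are toward the left ear."""
    # Largest physically possible delay: sound travelling ear to ear.
    max_lag = math.ceil(EAR_SPACING / SPEED_OF_SOUND * SAMPLE_RATE)
    tau = best_lag(left, right, max_lag) / SAMPLE_RATE
    # Far-field approximation: sin(angle) = c * tau / d, clamped to [-1, 1].
    x = max(-1.0, min(1.0, SPEED_OF_SOUND * tau / EAR_SPACING))
    return math.degrees(math.asin(x))


# A click that arrives 10 samples later at the right microphone.
left = [0.0] * 100
right = [0.0] * 100
left[40] = 1.0
right[50] = 1.0
print(round(azimuth_degrees(left, right), 1))
```

A real system has to cope with noise, reverberation, and several overlapping voices, which is where learned models come in; the geometry above only shows why two spaced microphones carry direction information at all.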

The project was led by Professor Shyam Gollakota, whose past work includes underwater GPS for smartwatches, turning beetles into photographers, brain implants that interact with electronics, and even a mobile app that can detect infections.

How does multi-speaker translation work?

“For the first time, we’ve preserved each speaker’s voice and the direction it’s coming from,” explains Gollakota, who teaches at UW’s Paul G. Allen School of Computer Science & Engineering.

The system works like a radar—it detects how many speakers are nearby and updates in real-time as people move in and out of range. Everything happens on the device itself, so no voice data gets sent to the cloud for translation. (Great for privacy!)

Besides translating speech, the system also “maintains the tone and volume of each speaker’s voice.” It even adjusts for movement, tweaking direction and audio intensity as speakers walk around. Interestingly, Apple is reportedly working on a similar real-time translation feature for AirPods.

How was it developed?

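The UW team tested the AI headphones in various indoor and outdoor environments. The system delivers translated audio with a delay of 2-4 seconds. In user testing, participants preferred a 3-4 second delay over a shorter one, because the translation made more errors when the delay was cut to 1-2 seconds. The team is working to reduce the delay in future versions.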

So far, the headphones support Spanish, German, and French, but more languages may be added. The researchers combined blind source separation, localization, real-time expressive translation, and binaural rendering into one seamless process—an impressive technical achievement.
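The last stage, binaural rendering, can be sketched in a few lines. The example below places a mono signal (standing in for a translated voice) at a given angle by delaying and attenuating the far-ear channel. It is a deliberately crude stand-in, not the researchers' renderer: real systems typically use measured head-related transfer functions, and the constants and the name `render_binaural` are assumptions for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value at room temperature
EAR_SPACING = 0.18      # m, assumed distance between the ears
SAMPLE_RATE = 48_000    # Hz, assumed playback rate


def render_binaural(mono, azimuth_deg, distance_m=1.0):
    """Return (left, right) sample lists for a mono signal at `azimuth_deg`.

    Positive azimuth places the source to the listener's left.
    Direction is conveyed by an interaural delay plus a level difference;
    distance is conveyed by a simple 1/distance attenuation.
    """
    theta = math.radians(azimuth_deg)

    # Interaural time difference, rounded to whole samples.
    itd = abs(EAR_SPACING * math.sin(theta) / SPEED_OF_SOUND)
    delay = round(itd * SAMPLE_RATE)

    # Constant-power panning: pan = 0 is hard right, pan = 1 is hard left.
    pan = (math.sin(theta) + 1.0) / 2.0
    gain_left = math.sin(pan * math.pi / 2.0)
    gain_right = math.cos(pan * math.pi / 2.0)

    # Quieter when farther away; clamp to avoid dividing by ~0.
    attenuation = 1.0 / max(distance_m, 0.1)

    near = list(mono) + [0.0] * delay  # ear facing the source hears it first
    far = [0.0] * delay + list(mono)   # other ear hears it `delay` samples later
    left_src, right_src = (near, far) if theta >= 0 else (far, near)

    left = [s * gain_left * attenuation for s in left_src]
    right = [s * gain_right * attenuation for s in right_src]
    return left, right


# A speaker 30 degrees to the left, 2 metres away.
left, right = render_binaural([1.0, 0.5, 0.25], azimuth_deg=30, distance_m=2.0)
```

Calling the function again with an updated angle and distance as a speaker moves gives a rough sense of how direction and intensity could be adjusted over time.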

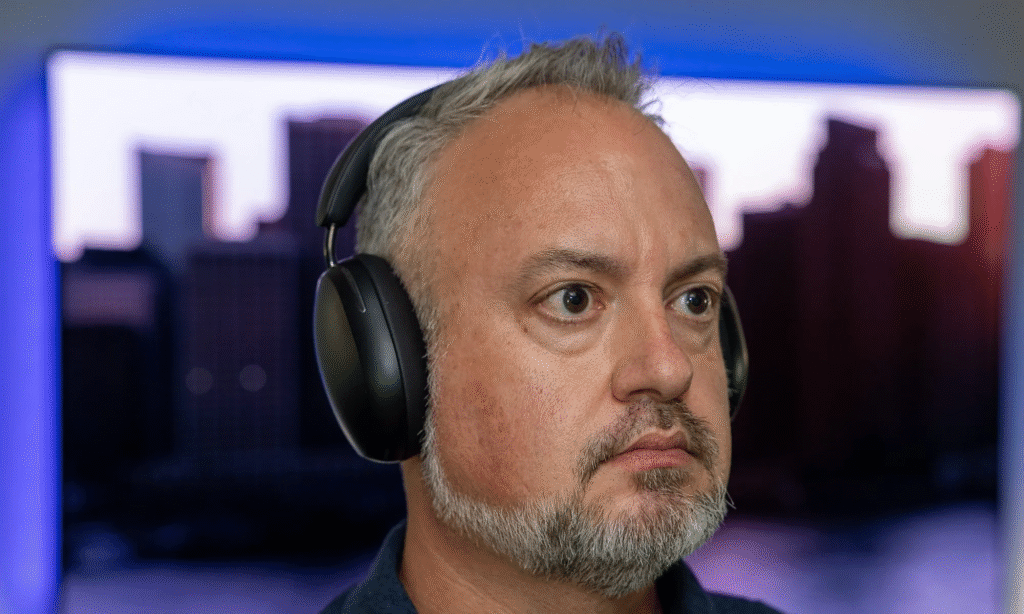

For hardware, they used a speech translation model running in real-time on an Apple M2 chip, paired with Sony WH-1000XM4 noise-cancelling headphones and a Sonic Presence SP15C binaural USB mic.

And here’s the best part: “The code for the proof-of-concept device is available for others to build on,” says UW’s press release. This means the open-source community can take UW’s work and develop even more advanced projects.